If you've done a number of analysis projects in Dataiku, you've likely come across the scenario where you want to "package" a series of recipes into a reusable process that you can apply to other, similar datasets. Copying the recipes works, but if you later want to update any piece of your prep, you have to make that change in every copy, which quickly becomes a consistency nightmare. With Dataiku's "app as recipe" functionality, you can package multiple recipes into a single plugin-like application that is easy to reuse throughout your team's projects.

Setting Up Dataiku's App as Recipe

For this tutorial, we start by downloading one of the well-known Kaggle datasets, the one from the Titanic prediction competition. Specifically, we're going to take the train.csv dataset from this page and upload it to a new Dataiku project called "Titanic Prep".

The Repeatable Task

As with any data science challenge, one of the first steps we likely want to perform is cleanup and feature creation on the data we're working with. Here, we create a new prepare recipe that drops every row of the Titanic dataset that has no value in the Age column and adds a new "Age_Bin" column that bins the ages into fixed-size intervals of 5.
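For readers who think in code, the logic of this prepare step can be sketched in plain Python. This is only an illustration of the two operations, not what Dataiku runs internally: the helper name `prepare_rows` is hypothetical, the sample rows are made up, and labeling each bin by its lower bound is an assumption about how the bins are named.

```python
import math

BIN_WIDTH = 5  # fixed-size interval used for Age_Bin in this tutorial


def prepare_rows(rows):
    """Drop rows with no Age and add an Age_Bin column to the rest."""
    prepared = []
    for row in rows:
        age = row.get("Age")
        if age is None or str(age).strip() == "":
            continue  # skip rows where Age is missing or empty
        # Assumption: each bin is labeled by its lower bound, e.g. 22 -> 20
        age_bin = math.floor(float(age) / BIN_WIDTH) * BIN_WIDTH
        prepared.append({**row, "Age_Bin": age_bin})
    return prepared


# Made-up rows using column names from train.csv, for illustration only
sample = [
    {"PassengerId": 1, "Age": "22", "Fare": "7.25", "Survived": "0"},
    {"PassengerId": 2, "Age": "", "Fare": "8.05", "Survived": "1"},
    {"PassengerId": 3, "Age": "38.5", "Fare": "71.28", "Survived": "1"},
    {"PassengerId": 4, "Age": "4", "Fare": "16.70", "Survived": "1"},
]
result = prepare_rows(sample)
```

The row with an empty Age is dropped, and the remaining rows each gain an Age_Bin value that is a multiple of 5.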

With that prep step complete, let's use the output to create a new grouping recipe that groups by our new Age_Bin column and aggregates the Fare and Survived columns as shown below:

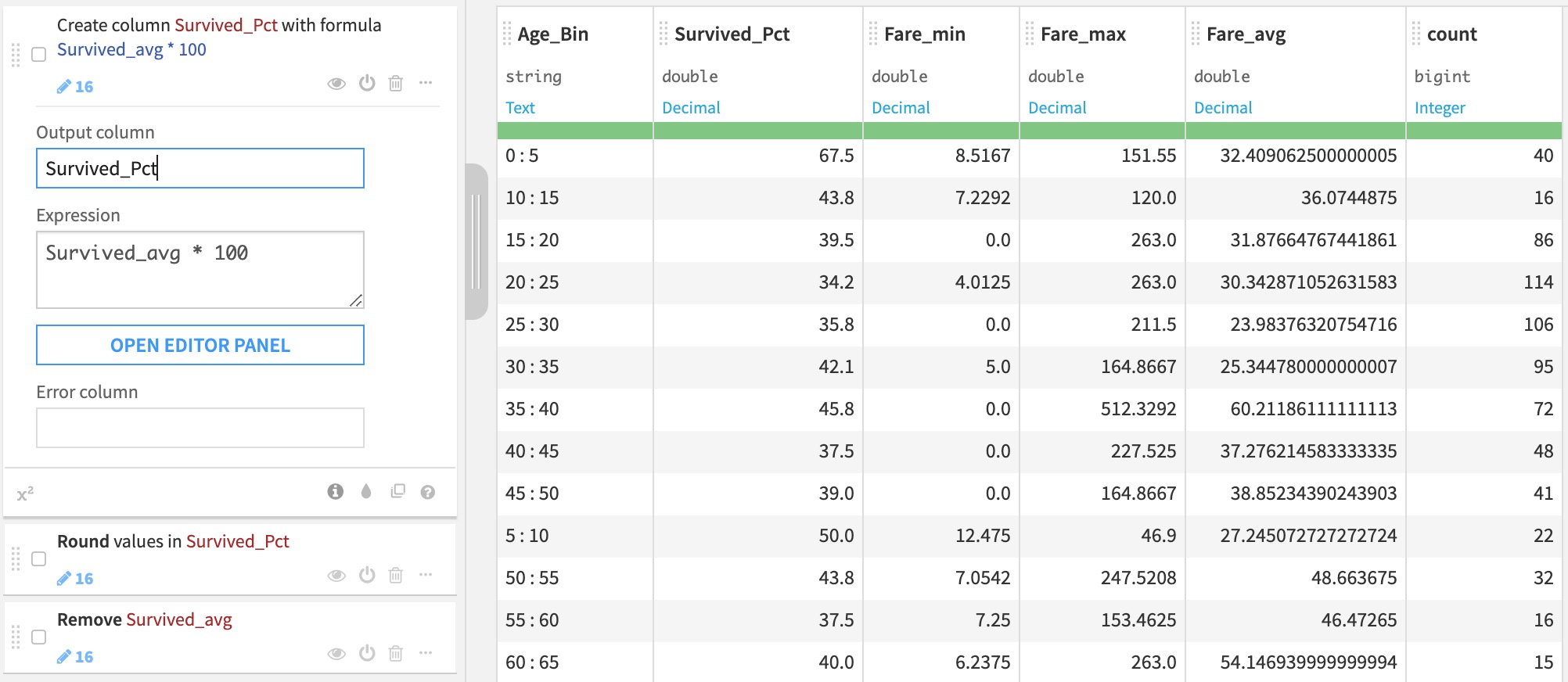
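As a rough code equivalent of this grouping step, the sketch below groups rows by Age_Bin and averages the two columns. The function name `group_by_age_bin` and the sample rows are made up for illustration. The Survived_avg output name matches the column used in the next step, while averaging Fare (and naming the result Fare_avg) is an assumption, since the aggregate chosen for Fare is only visible in the screenshot.

```python
from collections import defaultdict


def group_by_age_bin(rows):
    """Group rows by Age_Bin and average Fare and Survived within each bin."""
    buckets = defaultdict(list)
    for row in rows:
        buckets[row["Age_Bin"]].append(row)

    grouped = []
    for age_bin in sorted(buckets):
        members = buckets[age_bin]
        grouped.append({
            "Age_Bin": age_bin,
            # Assumption: Fare is averaged; another aggregate could be used
            "Fare_avg": sum(float(m["Fare"]) for m in members) / len(members),
            "Survived_avg": sum(int(m["Survived"]) for m in members) / len(members),
        })
    return grouped


# Made-up prepared rows, for illustration only
sample = [
    {"Age_Bin": 20, "Fare": 10.0, "Survived": 1},
    {"Age_Bin": 20, "Fare": 20.0, "Survived": 0},
    {"Age_Bin": 35, "Fare": 50.0, "Survived": 1},
]
grouped = group_by_age_bin(sample)
```

Because Survived is a 0/1 flag, its average per bin is the survival rate for that age group, which is what the final prep step turns into a percentage.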

Our final prep step will be to create a second prepare recipe that uses the output of the group recipe as its input. In this recipe we'll create a formula that turns our Survived_avg column into a percentage by multiplying it by 100 and rounding the result.
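The arithmetic behind that formula is small enough to sketch in Python. The function name `to_percentage`, the output column name `Survived_pct`, and the sample values are all hypothetical, chosen only to illustrate the calculation.

```python
def to_percentage(survived_avg):
    """Turn a 0-1 survival rate into a whole-number percentage."""
    # Note: Python's round() resolves exact .5 ties to the nearest even
    # integer, which may differ from how other tools round.
    return round(survived_avg * 100)


# Made-up grouped rows, for illustration only
grouped = [
    {"Age_Bin": 20, "Survived_avg": 0.406},
    {"Age_Bin": 35, "Survived_avg": 0.5},
]
with_pct = [
    {**g, "Survived_pct": to_percentage(g["Survived_avg"])} for g in grouped
]
```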

These prepare and group recipes are simple examples of the kind of chained recipes that we might want to reuse as a single unit. Next, we'll look at how to "package" them into a single reusable recipe.

Packaging as a Recipe

Creating a Scenario

Now that we've created a series of steps that we'd like to be able to reuse, the next step is to set up a scenario to define how our data should be processed in our flow.

In our project, we'll navigate to the Scenarios page and then click "+ CREATE YOUR FIRST SCENARIO", giving it a name of "Process".

In this scenario, under the Steps tab, we'll create a "Build" step to kick off the build of the dataset that we want our recipe to produce (the rightmost dataset in our flow, called titanic_prepared_by_Age_Bin_prepared in my project). Notice that in this step we've selected the "Force-rebuild" build mode, which ensures that the entire flow is reprocessed on every scenario run.

The Application Designer

The final and most important step required to convert our project and recipe flow into a single reusable recipe is done in the "Application Designer" page in the Dataiku project.

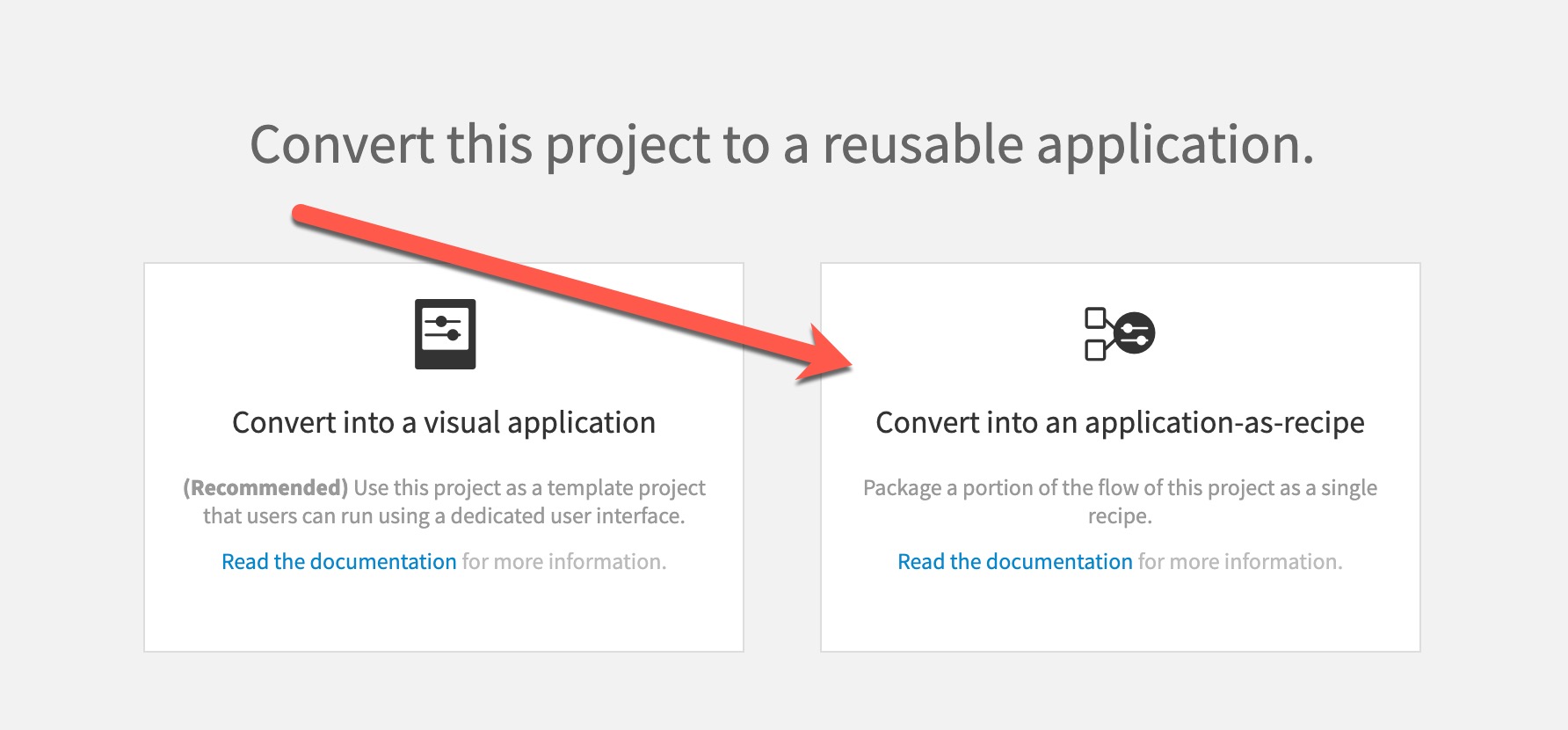

When you navigate to this page, you'll see an option to convert the project either to a Visual Application or to an application-as-recipe. For this tutorial, we're going to choose "application as recipe".

For a tutorial on how to use Visual Applications, see this blog post.

On the next page, we'll define the datasets that we'd like to be the input and output for our new recipe. In this case, the uploaded train.csv dataset is the input and the final prepared dataset at the end of the flow is the output.

We'll also specify how this application will process the data - using the scenario that we've previously defined. This tells Dataiku that when our new recipe wants to "build", it will run the scenario we've created which will perform the build steps.

For this tutorial, we're going to remove the content of the "Auto-generated controls" section since we won't need to allow for any advanced user interface interactivity with our project flow - but you could easily define and add parameters here with the help of this documentation.

Finally, we're going to add a little UI sparkle by clicking the "Use custom UI" link to add a custom user interface that will be shown to any DSS users consuming our recipe. The HTML code I've entered is purely for visual sizzle and is not necessary for your future recipe development - but it does give you a good idea of how to add more instructional and contextual information about your recipe.

HTML Code:

<div>
  <h4>This recipe will compute statistics for age bins in the Titanic datasets.</h4>
  <div>
    <img src='https://upload.wikimedia.org/wikipedia/commons/thumb/f/fd/RMS_Titanic_3.jpg/1280px-RMS_Titanic_3.jpg' />
  </div>
</div>

And... the creation of our app as recipe is just that easy! Be sure to hit "Save" when you've finished. In the next section, we'll see how to utilize the work we've just done in a consuming project.

Using the Recipe

Now that we have our app-as-recipe project created, let's jump back to the Dataiku home screen and create a new project to make use of it. We're going to name this project "Titanic Demo". In this project, we'll start by importing the test.csv file from the downloaded Titanic data.

Once you've uploaded the test dataset (which will have the same schema as our previous "train" set), you can find our new app-as-recipe by clicking on "+RECIPE", then Applications > Titanic Prep, as shown in the screenshot above.

After selecting our new recipe, you should see selections for the input and output datasets as we defined in the Application Designer in the previous project.

After specifying the input and output, you'll see the custom UI we've created and can execute our custom app recipe by clicking the "RUN" button in the lower-left-hand corner of this screen!

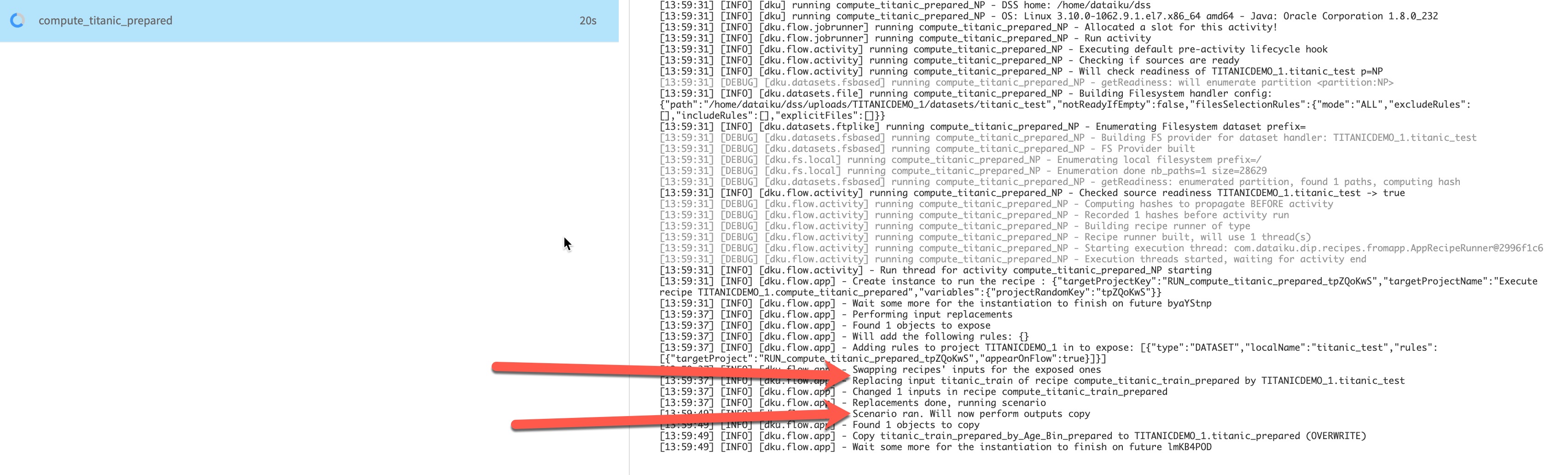

If you view the job logs for this execution, you'll see some indication of how the input datasets have been swapped from the original "train" to this "test" set, and also the execution of the scenario we've developed.

Finally, you'll see the output dataset of our app-as-recipe contains the prepared, grouped data that we had designed in the "Prep" project. This functionality is now totally reusable across your Dataiku projects for datasets with a similar schema.

Maintainability

As mentioned earlier, this approach is superior to copying and pasting multiple recipes because the logic lives in a single place. In the case of our Titanic Prep recipe, we only have to update the logic in the Titanic Prep project to provide new functionality to all of its consumers - making for a much easier and more maintainable solution.

Reusable Recipe Success

Dataiku has an amazing array of intuitive, easy-to-use visual (and code) recipes for performing advanced analysis and preparation work. One common challenge is building reusable recipe flows that consist of numerous individual recipes. As we've seen in this tutorial, with the "app as recipe" functionality, it's easy to build and maintain these recipes and utilize them in any number of projects across Dataiku teams and installations.

Learn more about how Snow Fox Data helps you maximize your investment in Dataiku.